Index: Study Splunk

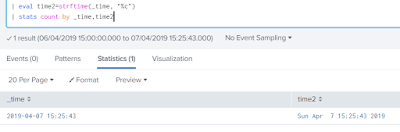

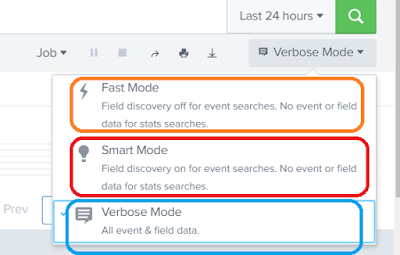

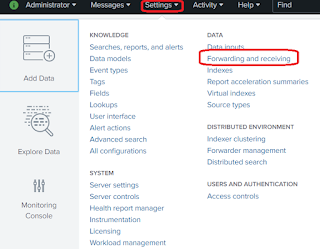

Want to learn about Splunk? You came to the right spot ;)

What does this blog contain so far?

- What is Splunk?
- Splunk Enterprise Components
- Installing Splunk
- Installing Splunk Universal Forwarder
- Walkthrough of Splunk Interface
- Search Modes
- Searching in Splunk
- #1 Splunk sub(commands) [top, rare, fields, table, rename, sort]
- #2 Splunk sub(commands) [eval, trim, chart, showperc, stats, avg]
- #3 Splunk sub(commands) [eval, round, trim, stats, ceil, exact, floor, tostring]
- #4 Splunk sub(commands) [timechart, geostats, iplocation]
- #5 Splunk sub(commands) [sendemail, dedup, eval, concatenate, new_field]
- #6 Splunk sub(commands) [fields, rename, replace, table, transaction]
- Bringing data into Splunk
- Bringing data into Splunk (Continued...)
- Enable receiving port on Splunk server
- Dealing with Time

I am still in the process of writing a couple more topics related to Splunk, but in the meantime you can go through any of the links given above! Do let me know if you have any